Hi. I'm Derek.

International Search Consultant and Affiliate Marketer.

I spent ten years running SEO for two of the world's most visited websites. In 2020, I quit to focus on growing my own projects.

I now live a life of my own design.

My Projects

What I'm Working On

Digital Real Estate

My core business is affiliate marketing. I drive leads to merchant partners with SEO. I build and rank websites using my own bespoke system. My niche site portfolio spans many of the Internet's most competitive verticals.

Affiliate Fast Track

Affiliate Fast Track is a self-directed training program for people who want to get into affiliate marketing. I developed a step-by-step roadmap and toolkit for launching your own passive-income-generating affiliate sites.

Nexus Mastermind

Nexus is my coaching and mentorship program. Access support, exclusive training and a community of like-minded entrepreneurs pursuing financial freedom through online business.

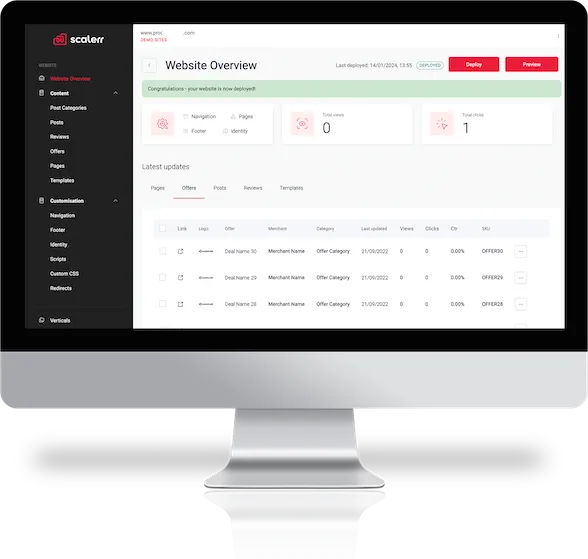

Scalerr CMS

Scalerr CMS is a proprietary platform for members of Affiliate Fast Track and Nexus Mastermind. It combines a site builder with an offer management system, so you can build, manage and scale your network from one dashboard.

INKSY

INKSY is a technology start-up I co-founded. We use computer algorithms and generative AI to create a range of experiential and made-for-you art.

Contact

Feel free to get in touch

Copyright 2024 | DerekDevlin.com | Terms | Privacy | Cookies

mail: support@scalerr.co